Containers, VMs, and Serverless

These are three terms that many people in the cloud computing and IaaS sector have been examining for some time. Their interest shares a common thread that can be summarized in a single question: what is the best way to deploy and manage applications in a cloud environment?

If you, too, are looking for the answer to this question, this article will give you an idea of what approaches most companies currently use.

Traditionally, every application was tightly bound to the server and the operating system it ran on. Over the last few years, thanks largely to the rise of the large cloud providers, this dependence has been reduced and deployment has been simplified. VMs, containers, and serverless represent different approaches to loosening the tight coupling typical of traditional IT environments.

The transition between these approaches can be seen as a genuine evolution of cloud computing environments. Their adoption has also fostered new methodologies for releasing applications, such as DevOps.

The first step: Virtual Machines

The first step in this evolution features virtual machines (VMs): operating systems installed on “virtual” hardware and fully isolated from one another. A VM abstracts a complete hardware environment so that the entire stack, from the operating system up to the application, becomes portable and reproducible.

How does a VM work? It all starts with the hardware (the server), on which a particular type of program called a hypervisor is installed (for example KVM, VMware ESXi, or Xen; cloud platforms such as OpenStack manage hypervisors like these). The hypervisor is responsible for providing and managing a complete “virtual” hardware stack (CPU, storage, network, etc.), on which the user can install whichever operating system best suits their application.

With VMs, several operating systems (Windows, Linux, etc.) can run simultaneously and fully independently on the same physical server, increasing the efficiency of the whole system.

VMs therefore offer the significant advantage of consolidating applications on a single server or on the same physical infrastructure, which translates into a substantial gain in efficiency and flexibility over a traditional, non-virtualized approach.

On the other hand, since each VM runs its own operating system, each one needs an image closely tied to that OS. In terms of efficiency, this is still an improvement over the traditional approach, in which a server hosts only one operating system, but it is far from optimal: every VM dedicates part of its resources exclusively to running its own OS. VMs nonetheless remain a useful choice for legacy applications that require complete control over their environment.
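To make the per-VM overhead concrete, here is a back-of-the-envelope sketch. All figures are hypothetical placeholders, not measurements, and it assumes one application per VM and the same host-level cost for a hypervisor as for a container runtime; the only point is that every VM pays for a full guest OS, while containers (discussed next) share the host kernel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Footprint:
    """Hypothetical memory costs in MiB; placeholders, not measurements."""
    host_os: int          # host OS (plus hypervisor or container runtime)
    guest_os: int         # memory each VM spends just running its own OS
    app: int              # memory the application itself needs
    container_extra: int  # assumed small per-container overhead


def vm_total(n_apps: int, f: Footprint) -> int:
    # One VM per application: each one boots a full guest OS next to the app.
    return f.host_os + n_apps * (f.guest_os + f.app)


def container_total(n_apps: int, f: Footprint) -> int:
    # Containers share the host kernel, so no guest OS is paid per application.
    return f.host_os + n_apps * (f.app + f.container_extra)


if __name__ == "__main__":
    f = Footprint(host_os=1024, guest_os=512, app=256, container_extra=16)
    for n in (1, 10, 50):
        print(f"{n:>3} apps: VMs {vm_total(n, f):>6} MiB, "
              f"containers {container_total(n, f):>6} MiB")
```

With these placeholder values, ten applications need 8704 MiB as VMs and 3744 MiB as containers; the gap grows linearly with the number of applications because each VM adds its own guest OS.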

But what is the right solution for new applications?

What are containers?

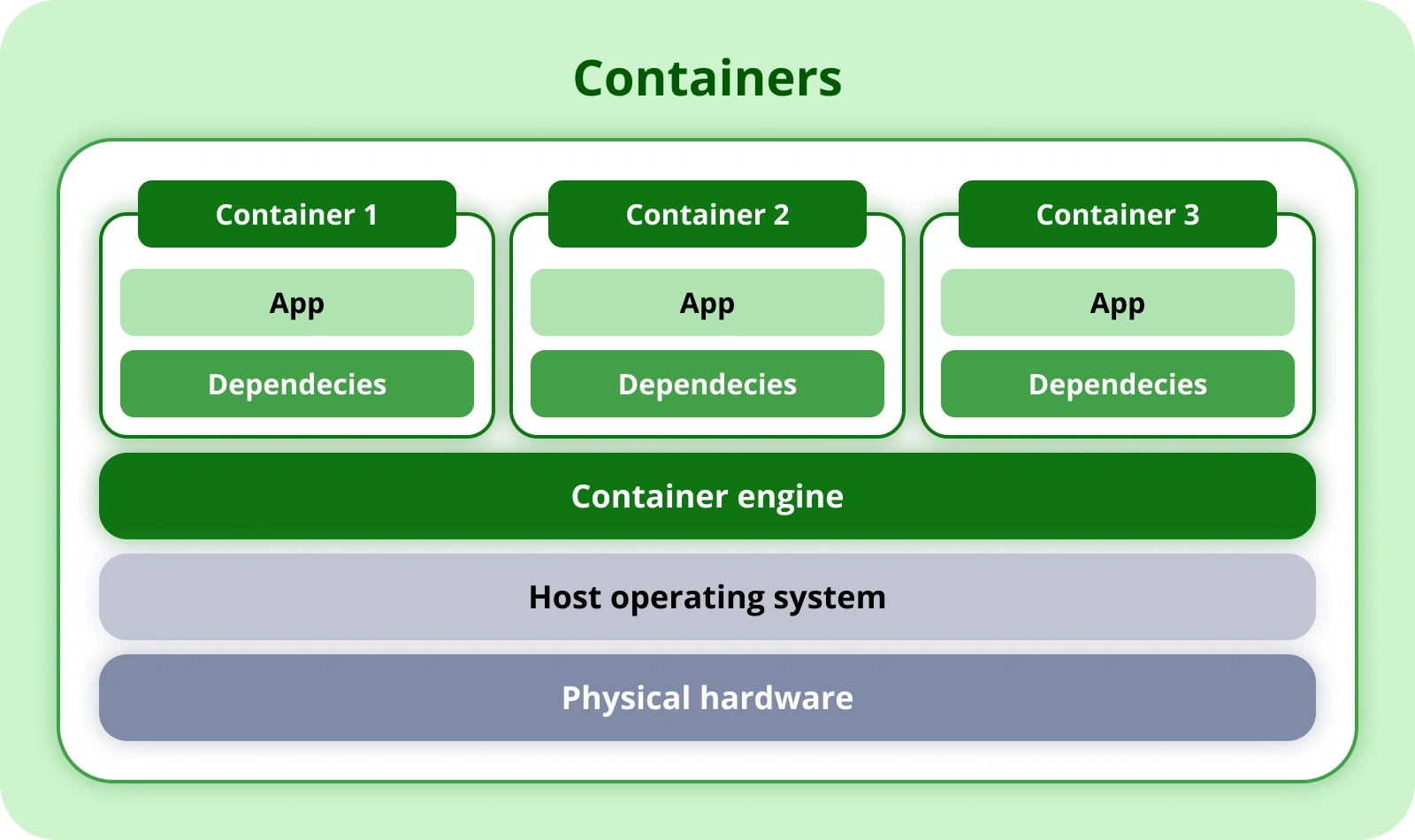

While virtual machines virtualize an entire machine, each with its own operating system, containers provide operating-system-level virtualization: part of the operating system, namely the kernel, acts as a common substrate shared among them. Because multiple containers share the host's kernel, users can build small images that do not need to bundle a full operating system of their own, reducing overhead and making distribution faster and portability easier.
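A simple way to observe kernel sharing is to print the kernel release from the host and from inside a container. This sketch assumes a Linux host running Linux containers: both runs should report the same release, whereas a VM would report its own guest kernel. On macOS or Windows, Docker Desktop runs containers inside a Linux VM, so they report that VM's kernel instead. The file name `kernel_check.py` used below is hypothetical.

```python
import platform


def kernel_release() -> str:
    """Return the release string of the kernel this process is running on."""
    # On Linux, platform.release() is the same value reported by `uname -r`.
    return platform.release()


if __name__ == "__main__":
    print(f"{platform.system()} kernel release: {kernel_release()}")
```

For example, run it on the host with `python3 kernel_check.py` and inside a container with something like `docker run --rm -v "$PWD":/w python:3 python /w/kernel_check.py`, then compare the two lines.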

Being lighter units of application isolation, containers include only the libraries and files needed to run the single application they package, which makes them faster to start and more resource-efficient than VMs. Distributing an application in containers provides guarantees of portability and repeatability that are harder to achieve with VMs alone.

Updates, and the related rollbacks when something goes wrong, are also much simpler. To update, you run a new container from the modified version of the image; if problems arise, it is enough to destroy that container and start a new one from the previous version of the image, which amounts to an immediate rollback.
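The update-and-rollback flow above can be sketched as a toy model. It is hypothetical and talks to no real container engine: "running" a container only records which image tag it was started from, and the `Deployment` class and its methods are illustrative names, not a Docker API. What it shows is that nothing is patched in place; every change destroys the current container and starts a fresh one.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Deployment:
    """Toy model of a containerized service (hypothetical, no real runtime)."""
    name: str
    history: list[str] = field(default_factory=list)  # image tags, oldest first
    running: Optional[str] = None                      # tag of the live container
    events: list[str] = field(default_factory=list)    # log of simulated actions

    def _replace_container(self, tag: str) -> None:
        # Never patch in place: destroy the current container, then run a new one.
        if self.running is not None:
            self.events.append(f"destroy {self.name}:{self.running}")
        self.events.append(f"run {self.name}:{tag}")
        self.running = tag

    def update(self, new_tag: str) -> None:
        """Roll forward by running a fresh container from a new image version."""
        self.history.append(new_tag)
        self._replace_container(new_tag)

    def rollback(self) -> str:
        """Destroy the current container and run one from the previous image."""
        if len(self.history) < 2:
            raise RuntimeError("no previous image version to roll back to")
        self.history.pop()  # drop the faulty version
        previous = self.history[-1]
        self._replace_container(previous)
        return previous


if __name__ == "__main__":
    web = Deployment("web")
    web.update("1.0")
    web.update("1.1")  # the new release misbehaves...
    web.rollback()     # ...so go straight back to 1.0
    print("\n".join(web.events))
```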

The key concept behind this technology is image immutability: each image contains only the files, packages, and libraries needed to run the application, and it cannot be modified. As mentioned, any change must be made in a new image that replaces the previous one. For this reason, containers fit naturally with the CI/CD paradigm, which favors small, continuous changes and speeds up both the development of new features and the resolution of bugs.
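Immutability can be illustrated with a frozen Python dataclass. This is a simplified, hypothetical model (the `Image` class and `with_package` helper are illustrative, not part of any container tool): an existing image cannot be changed, so "modifying" it means building a new image with a new tag, leaving the original available for rollback.

```python
from __future__ import annotations

import dataclasses
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    """Simplified, hypothetical model of an immutable container image."""
    name: str
    tag: str
    packages: tuple[str, ...]  # a tuple, so the contents cannot be mutated either


def with_package(image: Image, package: str, new_tag: str) -> Image:
    """Build a NEW image that adds one package; the original is left untouched."""
    return dataclasses.replace(image, tag=new_tag, packages=image.packages + (package,))


if __name__ == "__main__":
    base = Image("web", "1.0", ("python", "flask"))
    patched = with_package(base, "gunicorn", "1.1")
    print(base)     # unchanged
    print(patched)  # a separate image with a new tag
    try:
        base.tag = "1.2"  # in-place changes are rejected
    except dataclasses.FrozenInstanceError as err:
        print("cannot modify an existing image:", err)
```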

Containers, alas, are not perfect. Because they are dynamic and flexible by nature, they are also harder to isolate from one another on the same kernel, precisely because of their defining characteristic: sharing “parts” of the operating system on which they run.

Serverless Model

With serverless, the need to manage the infrastructure underlying the application is eliminated.

In practice, in this model you write the code, set a few configuration parameters, and hand the whole application to an automation service, with the goal of obtaining a working environment without having to know anything about the underlying infrastructure.

Serverless is particularly useful for relieving developers of most of the heavy lifting, such as allocating storage and defining network policies. All routine tasks for provisioning, maintaining, and scaling the infrastructure are delegated to the cloud provider's service. The 'server' resources the application needs are created and allocated automatically, while the code is packaged and deployed inside a container managed by the platform.

Unfortunately, serverless has its flaws too: it requires developers to rethink the way they build applications. Because its approach differs so much from traditional methods, it demands training and careful study that is often specific to each cloud provider. A well-known example of the serverless model is AWS Lambda functions.
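As a minimal sketch of what the developer actually writes for AWS Lambda's Python runtime: a handler function that receives the triggering event (a dict) and a context object. The response shape with `statusCode` and `body` assumes the function sits behind an API Gateway proxy integration, and the `name` query-string parameter is a hypothetical input; servers, scaling, and packaging are left to the platform.

```python
import json


def lambda_handler(event, context):
    """Entry point invoked by AWS Lambda for each event.

    `event` is the trigger payload (a dict); `context` carries runtime metadata
    and is unused here. The response format assumes an API Gateway proxy event.
    """
    # Hypothetical input: an optional "name" query-string parameter.
    # API Gateway sends None when there are no query parameters.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-made event; no AWS account is needed.
    print(lambda_handler({"queryStringParameters": {"name": "Lambda"}}, None))
```

The handler setting in the Lambda configuration (for instance `app.lambda_handler` if this file is `app.py`) tells the runtime which function to call.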

To recap: from VMs, through Containers, to Serverless

So far, the evolution across these approaches has been oriented toward simplification and automation:

- From VMs to containers: containers have simplified the distribution and management of applications, making resource use more efficient and improving scalability;

- From containers to serverless: serverless solutions have further simplified application management by eliminating the need to manage the underlying infrastructure, allowing developers to focus solely on their code.

In this evolution, however, one element deserves special mention: Kubernetes.

Kubernetes is currently one of the most advanced orchestration 'platforms' and can handle all the cases just mentioned. There are projects such as KubeVirt, which lets you run and orchestrate VMs inside Kubernetes pods alongside containers, and projects such as Knative, which let Kubernetes (K8s) be used as a serverless platform.
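To give an intuition of what using Kubernetes as a serverless platform involves, here is a deliberately simplified version of concurrency-based autoscaling, the idea behind Knative's default autoscaler: the desired number of pods is the observed number of in-flight requests divided by a per-pod concurrency target, rounded up, and it can fall to zero when there is no traffic. Real Knative also averages over time windows, applies a target utilization, and has many other settings; the function and parameter names below are hypothetical and are not Knative's configuration keys.

```python
from __future__ import annotations

import math
from typing import Optional


def desired_pods(in_flight_requests: float, target_per_pod: float,
                 min_scale: int = 0, max_scale: Optional[int] = None) -> int:
    """Simplified concurrency-based scaling decision (hypothetical helper).

    in_flight_requests: average number of concurrent requests observed
    target_per_pod: concurrent requests one pod is expected to handle
    min_scale: 0 allows scale-to-zero when there is no traffic
    max_scale: optional upper bound on the number of pods
    """
    if target_per_pod <= 0:
        raise ValueError("target_per_pod must be positive")
    pods = math.ceil(in_flight_requests / target_per_pod)
    pods = max(pods, min_scale)  # never go below the configured floor
    if max_scale is not None:
        pods = min(pods, max_scale)  # cap the number of pods
    return pods


if __name__ == "__main__":
    for load in (0, 25, 250):
        print(load, desired_pods(load, target_per_pod=10, max_scale=20))
```

With a target of 10 requests per pod, no traffic gives 0 pods, 25 concurrent requests give 3 pods, and 250 would give 25 but are capped at 20 by `max_scale`.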

In this 'evolutionary diagram', then, Kubernetes appears, from the application developer's point of view, as the final stage: it incorporates all the advantages of the previous approaches but adds a drawback in terms of management complexity, which brings DevOps concerns with it and the need to rely on more DevOps-oriented roles.

Which would you choose: VMs, containers, or serverless?

The answer should be: “It depends on the application.”

Each of these options has its own purpose and limitations, so the choice between VMs, containers, and serverless depends on the specific needs of the application and of its development and operations processes. Often, a hybrid approach that uses each of these technologies where it fits best is the right solution for an organization.